[Paper] [GitHub]

Introduction

This repository accompanies our paper, published in Sensors, in which we propose Multifog KITTI, a novel synthetic dataset that augments the KITTI dataset with foggy weather conditions. You can also check our project webpage for a more detailed introduction.

In this repository, we release code and data for training and testing our SLS-Fusion network on stereo camera images and LiDAR point clouds (64 beams and 4 beams), on both the KITTI and Multifog KITTI datasets.

Abstract

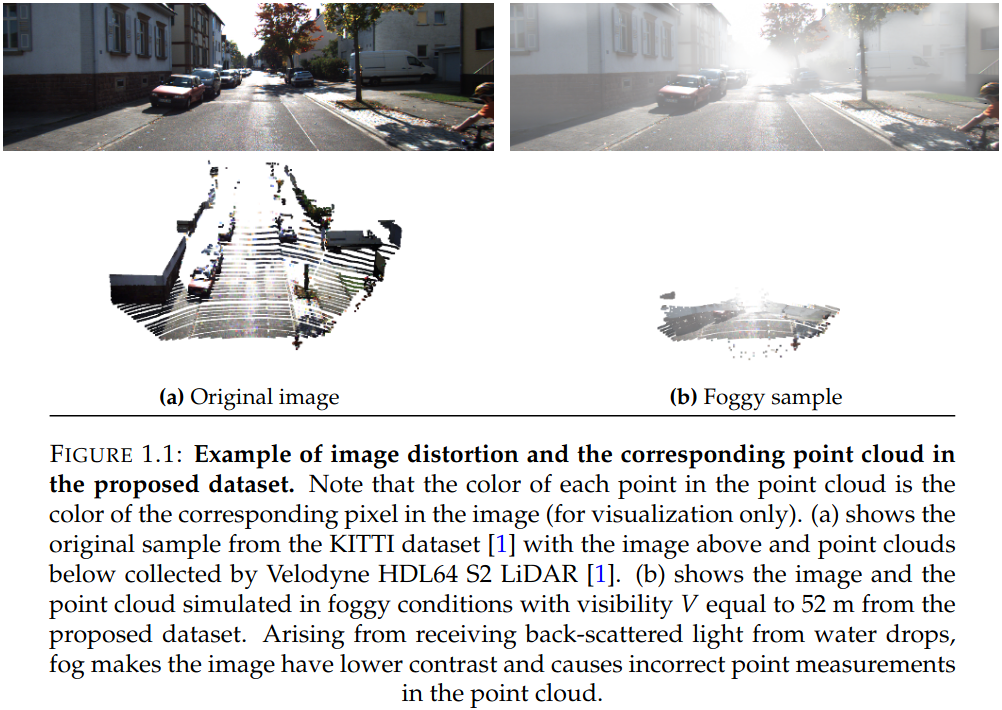

The role of sensors such as cameras or LiDAR (Light Detection and Ranging) is crucial for the environmental awareness of self-driving cars. However, the data collected from these sensors are subject to distortions in extreme weather conditions such as fog, rain, and snow. This issue could lead to many safety problems while operating a self-driving vehicle. The purpose of this study is to analyze the effects of fog on the detection of objects in driving scenes and then to propose methods for improvement. Collecting and processing data in adverse weather conditions is often more difficult than data in good weather conditions. Hence, a synthetic dataset that can simulate bad weather conditions is a good choice to validate a method, as it is simpler and more economical, before working with a real dataset. In this paper, we apply fog synthesis on the public KITTI dataset to generate the Multifog KITTI dataset for both images and point clouds. In terms of processing tasks, we test our previous 3D object detector based on LiDAR and camera, named the Sparse LiDAR Stereo Fusion Network (SLS-Fusion), to see how it is affected by foggy weather conditions. We propose to train using both the original dataset and the augmented dataset to improve performance in foggy weather conditions while keeping good performance under normal conditions. We conducted experiments on the KITTI and the proposed Multifog KITTI datasets which show that, before any improvement, performance is reduced by 42.67% in 3D object detection for Moderate objects in foggy weather conditions. By using a specific training strategy, the results significantly improved by 26.72% while still performing quite well on the original dataset, with a drop of only 8.23%. In summary, fog often causes the failure of 3D detection on driving scenes. By additional training with the augmented dataset, we significantly improve the performance of the proposed 3D object detection algorithm for self-driving cars in foggy weather conditions.
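To give a rough intuition for what fog synthesis on an image involves, here is a minimal sketch of the widely used atmospheric scattering model combined with Koschmieder's law. It is not the procedure used to build Multifog KITTI (see the paper for that); the function name `add_fog`, the constant `airlight`, and the 5% contrast threshold are assumptions chosen for illustration.

```python
import numpy as np


def add_fog(image, depth_m, visibility_m, airlight=1.0, contrast_threshold=0.05):
    """Blend an image with a uniform airlight according to per-pixel depth.

    image:        float array (H, W, 3) with values in [0, 1]
    depth_m:      float array (H, W), distance to the scene in metres
    visibility_m: meteorological visibility in metres (e.g. 20 to 80)

    Assumption: homogeneous fog and a constant airlight, which is a
    simplification and not the authors' simulation pipeline.
    """
    # Koschmieder's law: extinction coefficient from visibility distance.
    beta = -np.log(contrast_threshold) / visibility_m
    # Fraction of scene radiance that survives the path to the camera.
    transmission = np.exp(-beta * depth_m)[..., None]
    return image * transmission + airlight * (1.0 - transmission)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clear = rng.random((4, 6, 3))
    depth = np.full((4, 6), 40.0)  # every pixel 40 m away
    foggy = add_fog(clear, depth, visibility_m=20.0)
    print(foggy.min(), foggy.max())
```

With a lower visibility value, `beta` grows and distant pixels converge faster toward the airlight colour.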

Download our Multifog KITTI dataset

The training and testing splits of our Multifog KITTI dataset (Sensors 2021) are organized as follows:

- Training (7,481 frames):
  - [image_2] (.png, 4.3 GB)
  - [image_3] (.png, 4.1 GB)
  - [velodyne_64beams] (.bin, 10.7 GB)
  - [velodyne_4beams] (.bin, 98 MB)
  - [visibility_level] (.txt, 20 m to 80 m visibility level)
- Testing (7,518 frames):
  - [image_2] (.png)
  - [image_3] (.png)
  - [velodyne_64beams] (.bin, 10.9 GB)
  - [velodyne_4beams] (.bin)
  - [visibility_level] (.txt, 20 m to 80 m visibility level)
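As a starting point for working with the point clouds, the sketch below reads one `.bin` file with NumPy. It assumes the files follow the standard KITTI Velodyne layout (consecutive float32 values, four per point: x, y, z, reflectance); the helper names and the example path are hypothetical, and the layout of the `visibility_level` text files is not covered here.

```python
from pathlib import Path

import numpy as np


def load_velodyne_bin(path):
    """Read a point cloud as an (N, 4) float32 array: x, y, z, reflectance.

    Assumption: the file uses the standard KITTI Velodyne binary layout.
    """
    points = np.fromfile(str(path), dtype=np.float32)
    return points.reshape(-1, 4)


def list_frame_ids(folder, suffix=".bin"):
    """Return the sorted frame identifiers (file stems) found in a folder."""
    return sorted(p.stem for p in Path(folder).glob(f"*{suffix}"))


if __name__ == "__main__":
    # Hypothetical location; adjust to wherever the archives were extracted.
    folder = Path("training/velodyne_64beams")
    for frame_id in list_frame_ids(folder)[:3]:
        cloud = load_velodyne_bin(folder / f"{frame_id}.bin")
        print(frame_id, cloud.shape)
```

The same loader should work for both the 64-beam and 4-beam folders if they share the layout assumed above.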

If you want to work with sparse LiDAR, please refer here for 2-beam, 4-beam, 8-beam, 16-beam, and 32-beam LiDAR point clouds derived from the 64-beam LiDAR of the KITTI dataset.
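For intuition, one simple way to thin a 64-beam scan is to estimate each point's beam from its elevation angle and keep every n-th beam. The sketch below illustrates that idea only; it is not necessarily how the linked sparse point clouds were produced. The function name `subsample_beams` and the approximate vertical field of view of (-24.9°, 2.0°) are assumptions.

```python
import numpy as np


def subsample_beams(points, num_beams_in=64, num_beams_out=4, fov_deg=(-24.9, 2.0)):
    """Keep only the points that fall on every n-th estimated beam.

    points:  (N, 4) array of x, y, z, reflectance
    fov_deg: assumed (lowest, highest) elevation angle of the sensor, in degrees

    Returns the kept points and their estimated beam indices.
    """
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    # Elevation of each point above the sensor's horizontal plane.
    elevation = np.degrees(np.arctan2(z, np.hypot(x, y)))
    low, high = fov_deg
    # Quantise the elevation into equally sized angular bins, one per beam.
    beam = np.floor((elevation - low) / (high - low) * num_beams_in).astype(int)
    beam = np.clip(beam, 0, num_beams_in - 1)
    # Keeping every `step`-th beam leaves num_beams_out beams.
    step = num_beams_in // num_beams_out
    keep = beam % step == 0
    return points[keep], beam[keep]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    cloud = rng.normal(size=(1000, 4)).astype(np.float32)
    sparse, beams = subsample_beams(cloud)
    print(cloud.shape, sparse.shape, np.unique(beams))
```

Binning by elevation is only an approximation, since real beams are not perfectly evenly spaced; a sensor-specific calibration would give a cleaner split.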

Citation

If you find our work useful in your research, please consider citing:

@article{nammai_sensors_2021,

AUTHOR = {Mai, Nguyen Anh Minh and Duthon, Pierre and Khoudour, Louahdi and Crouzil, Alain and Velastin, Sergio A.},

TITLE = {3D Object Detection with SLS-Fusion Network in Foggy Weather Conditions},

JOURNAL = {Sensors},

VOLUME = {21},

YEAR = {2021},

NUMBER = {20},

ARTICLE-NUMBER = {6711},

URL = {https://www.mdpi.com/1424-8220/21/20/6711},

PubMedID = {34695925},

ISSN = {1424-8220},

ABSTRACT = {},

DOI = {10.3390/s21206711}

}

@inproceedings{nammai_icprs_2021,

author={Mai, Nguyen Anh Minh and Duthon, P. and Khoudour, L. and Crouzil, A. and Velastin, S. A.},

booktitle={11th International Conference of Pattern Recognition Systems (ICPRS 2021)},

title={Sparse LiDAR and Stereo Fusion (SLS-Fusion) for Depth Estimation and 3D Object Detection},

year={2021},

volume={2021},

number={},

pages={150-156},

doi={10.1049/icp.2021.1442}}

Contact

Any feedback is very welcome! Please contact us at mainguyenanhminh1996 AT gmail DOT com